The Crash of the H100 Market

Description: This report examines recent shifts in the GPU market, focusing on changing demand for NVIDIA's H100 amid technological advances, open-source AI models, and market recalibrations.

- META

- Mining

- AI Hardware

- Cerebras

- Groq

- Large Language Model

Abstract

The graphics processing unit (GPU) market has undergone significant changes in recent years, driven by technological advancements in AI, shifting demand patterns, and economic factors. This report will delve into the current state of GPU pricing, focusing on the over-investment in high-end GPUs like the H100, the impact of open-source models, and the subsequent market adjustments.

Introduction

In 2023, the NVIDIA H100 GPU became the go-to choice for training large-scale AI models, with rental prices soaring to as much as $8 per hour. However, by 2024, several key developments rapidly altered this market dynamic.

2023: Start of the AI Hype

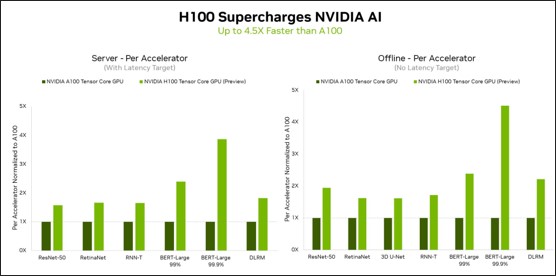

In 2023, NVIDIA's H100, its flagship data-center GPU for large-scale AI workloads, dominated the market, with rental prices reaching as high as $8 per hour. For AI startups and data centers alike, the H100 was seen as essential for training cutting-edge models, and overwhelming demand for AI training drove both high prices and shortages in availability.

The general sentiment was that this demand would keep climbing and that H100 prices would stay high. The H100's superior compute performance on AI training tasks, combined with the proliferation of large-scale model training, made renting H100s feel like a necessity for staying competitive in the race to develop Artificial General Intelligence (AGI). Capital pouring into AI startups reinforced this demand and pushed GPU cluster pricing into a bubble, with the expectation that H100 prices would remain elevated for the foreseeable future.

However, that market dynamic underwent a rapid transformation in 2024, as several key events changed the landscape.

2024: The Turning Point

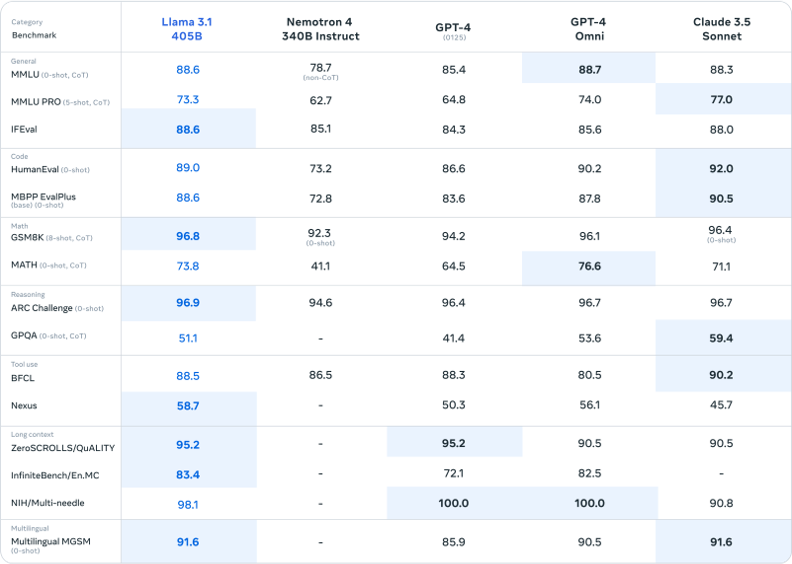

1. Llama 3.1: Meta Open-Sources a SOTA 405B Model

A pivotal moment came when Meta open-sourced Llama 3.1, including a state-of-the-art (SOTA) 405-billion-parameter model. This gave companies a stark choice: invest upwards of $100 million to build and train their own large-scale models, or fine-tune Meta's freely available model, which performed comparably to OpenAI's best GPT models. Most companies opted for the latter, drawn by the immense cost savings and shorter time to market.
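To put the "upwards of $100 million" figure in perspective, a common back-of-envelope estimate puts training compute at about 6 FLOPs per parameter per token. The sketch below applies that heuristic to a 405B-parameter model. The token count (~15 trillion), the H100 dense BF16 peak (~989 TFLOP/s), the 40% utilization, and the hourly prices are all assumptions chosen for illustration, not figures from this report.

```python
# Back-of-envelope pretraining cost using the "6 * N * D" FLOPs heuristic.
# Every input in the __main__ block is an illustrative assumption.


def training_flops(params: float, tokens: float) -> float:
    """Approximate training FLOPs: ~6 FLOPs per parameter per token (forward + backward)."""
    return 6.0 * params * tokens


def gpu_hours(total_flops: float, peak_flops_per_gpu: float, utilization: float) -> float:
    """Convert total FLOPs to GPU-hours at a given model FLOPs utilization (MFU)."""
    effective_flops_per_second = peak_flops_per_gpu * utilization
    return total_flops / effective_flops_per_second / 3600.0


def training_cost_usd(hours: float, price_per_gpu_hour: float) -> float:
    """Rental cost of the run at a flat hourly price per GPU."""
    return hours * price_per_gpu_hour


if __name__ == "__main__":
    N = 405e9      # parameters
    D = 15e12      # assumed pretraining tokens (~15 trillion)
    PEAK = 989e12  # assumed H100 SXM dense BF16 peak, FLOP/s
    MFU = 0.40     # assumed utilization

    hours = gpu_hours(training_flops(N, D), PEAK, MFU)
    print(f"~{hours / 1e6:.1f}M GPU-hours")
    for price in (2.0, 4.0, 8.0):
        print(f"${price:.2f}/GPU-hr -> ${training_cost_usd(hours, price) / 1e6:,.0f}M")
```

Under these assumptions the run needs roughly 25 million H100-hours, which lands between about $50 million and $205 million across the $2 to $8 per hour range, the same order of magnitude as the $100 million figure.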

This led to a sharp decline in the demand for training resources. With the availability of Meta's model, it became clear that many organizations no longer needed to invest in expensive GPU time for training from scratch. Instead, fine-tuning existing models, which requires significantly less compute power, became the more attractive and cost-effective route.
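Under the same 6·N·D heuristic, the parameter count cancels out, so the compute of a full fine-tuning run relative to pretraining is simply the ratio of token counts. The sketch below assumes a 15-trillion-token pretraining corpus; the fine-tuning dataset sizes are hypothetical.

```python
# Fraction of pretraining compute consumed by a full fine-tuning run,
# using the ~6 * N * D FLOPs heuristic. Token counts are hypothetical.


def training_flops(params: float, tokens: float) -> float:
    """Approximate training FLOPs: ~6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def compute_ratio(params: float, pretrain_tokens: float, finetune_tokens: float) -> float:
    """Fine-tuning FLOPs divided by pretraining FLOPs (N cancels out)."""
    return training_flops(params, finetune_tokens) / training_flops(params, pretrain_tokens)


if __name__ == "__main__":
    N = 405e9
    PRETRAIN_TOKENS = 15e12  # assumed pretraining corpus size
    for ft_tokens in (100e6, 1e9, 10e9):  # hypothetical fine-tuning dataset sizes
        r = compute_ratio(N, PRETRAIN_TOKENS, ft_tokens)
        print(f"{ft_tokens / 1e9:>5.1f}B tokens -> {r:.4%} of pretraining compute")
```

Even a 10-billion-token fine-tune comes to well under 0.1% of the pretraining compute in this simplified model.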

Definition of Fine-Tuning

Fine-tuning is the process of taking a pre-trained machine learning model and adapting it to a specific task by training it further on a smaller, task-specific dataset. It allows the model to leverage its existing knowledge while adjusting to new patterns in the target data, making it more effective for the specific application.
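A toy sketch of the idea, assuming a one-variable linear model in place of a neural network: the model is first "pretrained" on a large synthetic dataset, then fine-tuned on just ten examples from a related task. With the same small step budget, starting from the pretrained weights reaches a much lower loss than training from scratch. All data, learning rates, and step counts here are hypothetical.

```python
import random


def make_task(rng, slope, intercept, n, noise=0.1):
    """Sample n noisy points from y = slope * x + intercept, with x in [-1, 1]."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, slope * x + intercept + rng.gauss(0.0, noise)) for x in xs]


def mse(w, b, data):
    """Mean squared error of the model y = w * x + b on data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w, b, data, lr=0.1, steps=100):
    """Full-batch gradient descent on MSE, starting from the given (w, b)."""
    n = len(data)
    for _ in range(steps):
        gw = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        gb = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


def demo(seed=0, budget=20):
    """Return (fine-tuned loss, from-scratch loss) on the small target task."""
    rng = random.Random(seed)
    pretrain = make_task(rng, slope=3.0, intercept=1.0, n=1000)  # large "pretraining" set
    target = make_task(rng, slope=3.0, intercept=1.5, n=10)      # small, related task
    w0, b0 = train(0.0, 0.0, pretrain, steps=500)                # "pretraining"
    fine_tuned = train(w0, b0, target, steps=budget)             # adapt pretrained weights
    scratch = train(0.0, 0.0, target, steps=budget)              # same budget, no pretraining
    return mse(*fine_tuned, target), mse(*scratch, target)


if __name__ == "__main__":
    ft_loss, scratch_loss = demo()
    print(f"fine-tuned loss:   {ft_loss:.4f}")
    print(f"from-scratch loss: {scratch_loss:.4f}")
```

The fine-tuned model only has to correct the small shift in the intercept, while the from-scratch model must learn both parameters within the same budget, which mirrors why adapting a pretrained LLM is far cheaper than training one.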

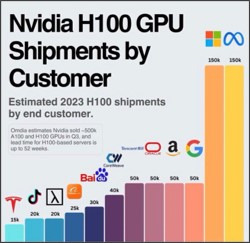

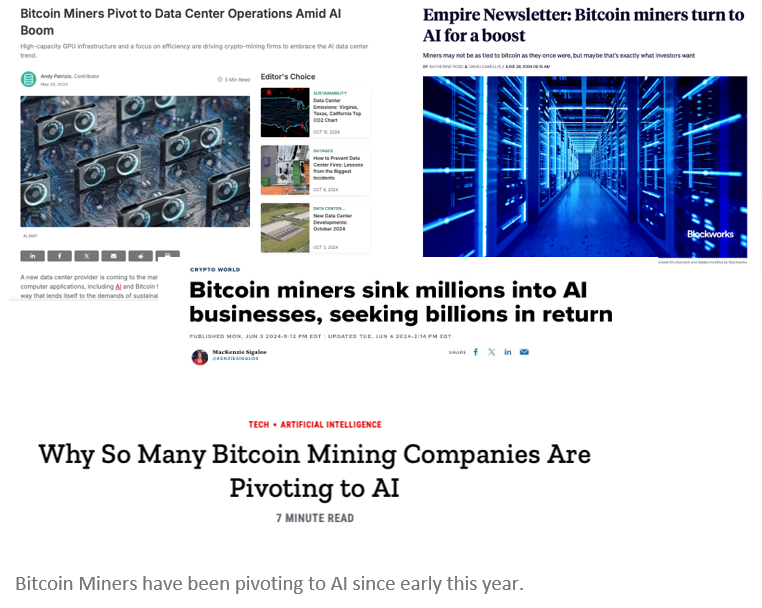

2. Increased Supply of H100s

At the same time, the supply of H100s increased dramatically. Better production yields and the fulfillment of large backlogged orders from 2023 made it easier for companies to secure allocations. Former cryptocurrency-mining firms also pivoted into the AI space; these companies, now dubbed "Neoclouds," took advantage of the improved availability to enter the market with large H100 clusters just as training demand began to wane. Many Neoclouds focused exclusively on H100 SXMs, adding further to the supply glut.

3. Shift to Inference and Cheaper Alternatives

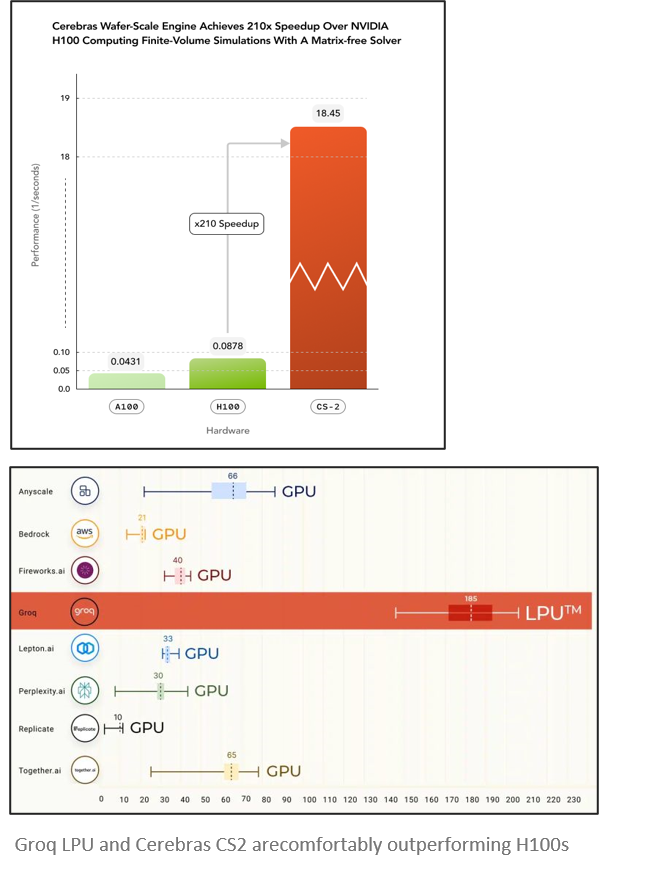

As demand for large-scale training declined, a significant share of AI activity shifted toward inference, where trained models are used to make predictions and perform tasks in real time. Inference typically needs far less compute per request than training, so cheaper alternatives to the H100, such as NVIDIA's L40 GPUs and specialized inference hardware from companies like Groq, Cerebras, and SambaNova, became increasingly popular choices.

These inference-specialized chips offer a lower cost per unit of inference throughput, further eroding demand for H100 GPUs. The GPU market was increasingly saturated, and with fewer organizations needing expensive H100s for training, prices began to tumble.
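The cost comparison can be made concrete as dollars per million generated tokens: hourly price divided by tokens served per hour. A common rule of thumb is that inference costs about 2N FLOPs per token for an N-parameter model, versus about 6N per training token, which is one reason cheaper hardware can serve models economically. The two accelerators below are hypothetical placeholders with assumed prices and throughputs, not measured products.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    price_per_hour: float     # USD per accelerator-hour (assumed)
    tokens_per_second: float  # sustained inference throughput (assumed)

    def cost_per_million_tokens(self) -> float:
        """USD to generate one million tokens at full utilization."""
        tokens_per_hour = self.tokens_per_second * 3600.0
        return self.price_per_hour / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical options: a faster but pricier GPU vs. a slower, cheaper inference part.
    options = [
        Accelerator("high-end training GPU (hypothetical)", price_per_hour=4.00, tokens_per_second=2000),
        Accelerator("inference-optimized chip (hypothetical)", price_per_hour=1.50, tokens_per_second=1000),
    ]
    for acc in sorted(options, key=Accelerator.cost_per_million_tokens):
        print(f"{acc.name}: ${acc.cost_per_million_tokens():.3f} per 1M tokens")
```

Here the slower part wins (about $0.42 versus $0.56 per million tokens) because its price is lower by a larger factor than its throughput; real comparisons also depend on batch size, latency targets, and utilization.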

Future of H100 Pricing

Looking ahead:

- AMD and Blackwell Competition: AMD's push into AI accelerators and NVIDIA's upcoming Blackwell GPUs will increase competition. Blackwell is expected to deliver better performance per dollar than the H100, likely driving down H100 prices as customers upgrade.

- Inference Hardware: The rise of inference-specialized chips is reducing demand for H100s, as companies prefer more affordable, inference-optimized hardware.

- Oversupply and Price Drops: An oversupply of H100s, combined with competition and new alternatives, will likely push rental prices below $2 per hour, especially as Neoclouds expand.
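Falling rental rates matter most to the operators who bought the hardware. A simple payback calculation shows how much longer cost recovery takes as prices fall from $8 toward $2 per hour. It assumes a hypothetical $30,000 all-in cost per GPU, $0.50 per hour for power and hosting, and 70% rented utilization; none of these inputs come from this report.

```python
# Months for a GPU owner to recover hardware cost from rental income.
# CAPEX, OPEX, and UTIL below are illustrative assumptions only.

HOURS_PER_MONTH = 8760.0 / 12.0  # average hours in a month (730)


def payback_months(capex: float, rent_per_hour: float, opex_per_hour: float, utilization: float) -> float:
    """Months to break even, or infinity if the rental margin is not positive."""
    monthly_margin = (rent_per_hour - opex_per_hour) * utilization * HOURS_PER_MONTH
    if monthly_margin <= 0:
        return float("inf")
    return capex / monthly_margin


if __name__ == "__main__":
    CAPEX = 30_000.0  # assumed all-in cost per GPU, USD
    OPEX = 0.50       # assumed power, cooling, and hosting per GPU-hour, USD
    UTIL = 0.70       # assumed fraction of hours actually rented
    for rent in (8.0, 4.0, 2.0, 1.5):
        print(f"${rent:.2f}/hr -> {payback_months(CAPEX, rent, OPEX, UTIL):.1f} months")
```

With these inputs, payback stretches from under 8 months at $8 per hour to about 39 months at $2 per hour, which illustrates how sharply lower rental prices change the economics for Neoclouds.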